Computer Vision in Industry

What is Computer Vision?

Computer Vision (CV) is a field of artificial intelligence that enables computers to extract meaningful information from digital images. Using technologies such as machine learning and deep neural networks, it allows machines to mimic the way the human eye and brain interpret what they see. The main purposes of computer vision are to identify objects, analyze motion, interpret complex scenes, enhance images, and create 3D models from 2D images. The technology also has a wide range of industrial uses, including quality control, inspection, automation, safety systems, robotic and machine vision, and autonomous vehicles. These uses support goals such as efficiency improvement, smart factories and integrated systems. Computer vision plays a critical role in industry by improving quality, speeding up production, ensuring safety and optimizing operations. Used effectively, it strengthens companies' competitiveness and lowers their costs.

The Relationship Between Computer Vision and Image Processing

Although computer vision and image processing share many techniques for working with images, they differ in purpose and approach. Both fields work on digital image data and analyze and process images using mathematical and statistical methods. Techniques such as filtering, transformations and feature extraction are widely used in both. However, image processing usually deals with lower-level tasks such as correcting, enhancing, compressing or converting raw images. Computer vision, by contrast, aims to extract meaningful information from images, recognize objects and understand scenes. In image processing, the result is usually a processed or improved image. In computer vision, the result is information extracted from the image, which is then used to make decisions or trigger actions. Learning-based computer vision usually requires a dataset to train an artificial intelligence model, whereas classical image processing does not rely on a trained model.
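
To make the difference concrete, here is a minimal Python sketch. It assumes a tiny grayscale image stored as nested lists of 0-255 intensities; real code would typically use NumPy arrays or OpenCV. `stretch_contrast` is an image-processing step: an image goes in and an image comes out. `is_defective` is a toy computer-vision step: an image goes in and a decision comes out. Both function names and the thresholds are illustrative assumptions. A real inspection system would normally replace the hand-written rule with a trained model.

```python
Image = list[list[int]]  # assumption: grayscale image as rows of 0-255 intensities


def stretch_contrast(img: Image) -> Image:
    """Image processing: linearly map the [min, max] intensity range onto [0, 255]."""
    lo = min(min(row) for row in img)
    hi = max(max(row) for row in img)
    if hi == lo:  # flat image: nothing to stretch, return a copy
        return [row[:] for row in img]
    return [[round((p - lo) * 255 / (hi - lo)) for p in row] for row in img]


def is_defective(img: Image, dark_level: int = 50, max_dark_fraction: float = 0.05) -> bool:
    """Computer vision (toy rule): flag the part if too large a share of pixels is dark.

    The thresholds are hypothetical; a trained model would normally make this decision.
    """
    pixels = [p for row in img for p in row]
    dark = sum(1 for p in pixels if p < dark_level)
    return dark / len(pixels) > max_dark_fraction


raw = [[100, 110, 120], [105, 40, 115], [110, 120, 130]]
print(stretch_contrast(raw))  # still an image, now spanning 0..255
print(is_defective(raw))      # a decision about the part
```

The first function never "knows" anything about the part. The second turns pixels into a yes/no answer that a production line can act on.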

Computer Vision Operating Principle

Computer vision applications use sensing devices, artificial intelligence, machine learning and deep learning to mimic the human visual system. The process starts with acquiring images using devices such as digital cameras. The images are represented as matrices of pixels. The raw images usually go through preprocessing steps such as noise removal, contrast enhancement and resizing, which make them suitable for further analysis. Features are then extracted from the images and objects are detected. At this stage, the properties of the objects in the image are identified and passed to machine learning and deep learning models. Finally, the computer uses this information to perform tasks such as object recognition or classification and to make decisions based on the application. For example, a machine vision system on a production line can perform quality control. It detects defects on product surfaces, measures product dimensions and checks for color deviations. When a defect is detected, the system automatically separates the defective product, so that only products that meet quality standards continue along the line.
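
The pipeline above can be sketched end to end in plain Python. This is a simplified illustration rather than a production design, and it rests on several assumptions:

- an RGB image given as nested lists of (R, G, B) tuples;
- grayscale conversion with the ITU-R BT.601 luma weights;
- a 3×3 mean filter for noise removal;
- nearest-neighbor resizing to a hypothetical 4×4 model input;
- a single hand-crafted feature (mean brightness);
- a hypothetical brightness threshold standing in for a trained model.

Contrast enhancement is left out for brevity, and all function names are illustrative.

```python
RGB = tuple[int, int, int]
Gray = list[list[float]]


def to_gray(img: list[list[RGB]]) -> Gray:
    """Convert an RGB image to grayscale using ITU-R BT.601 luma weights."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in img]


def mean_filter(img: Gray) -> Gray:
    """3x3 mean filter for noise removal; border pixels average only existing neighbors."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [img[j][i]
                      for j in range(max(0, y - 1), min(h, y + 2))
                      for i in range(max(0, x - 1), min(w, x + 2))]
            row.append(sum(window) / len(window))
        out.append(row)
    return out


def resize_nearest(img: Gray, new_h: int, new_w: int) -> Gray:
    """Nearest-neighbor resize to the fixed input size a model expects."""
    h, w = len(img), len(img[0])
    return [[img[y * h // new_h][x * w // new_w] for x in range(new_w)] for y in range(new_h)]


def extract_features(img: Gray) -> dict[str, float]:
    """One hand-crafted feature (mean brightness); a trained model would learn its own."""
    pixels = [p for row in img for p in row]
    return {"mean_brightness": sum(pixels) / len(pixels)}


def inspect(img: list[list[RGB]], min_brightness: float = 100.0) -> str:
    """Acquired image -> preprocessing -> features -> decision ('pass' or 'reject')."""
    gray = to_gray(img)                   # preprocessing: color to grayscale
    smooth = mean_filter(gray)            # preprocessing: noise removal
    small = resize_nearest(smooth, 4, 4)  # preprocessing: resizing
    features = extract_features(small)    # feature extraction
    # Decision: a hypothetical threshold stands in for a trained classifier.
    return "pass" if features["mean_brightness"] >= min_brightness else "reject"


bright_part = [[(255, 255, 255)] * 8 for _ in range(8)]
print(inspect(bright_part))  # pass
```

Each stage is a separate function so that any one of them (for example, the threshold decision) can be swapped for a learned component without touching the others.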

Computer Vision in Manufacturing and Industry

Computer vision gives manufacturers technological support that simplifies product processing. Using models trained on previously collected data, manufacturers can analyze images and videos for product packaging, inspection, quality control, design, product sorting and process automation. As a component of the digital transformation ecosystem, computer vision can give companies a competitive advantage. Manufacturers who want to be at the forefront of change in the sector are particularly keen to adopt it.

- ● Quality Control:

- ○ Product Inspection and Error Detection:

- ◾ Computer vision is used to detect defects in products leaving production lines. For example, it can detect whether the surface of parts made on a production line has scratches, cracks or faulty paint.

- ◾ With high-resolution cameras and image processing algorithms, small surface defects that are invisible to the human eye can be detected reliably.

- ○ Size and Shape Control:

- ◾ Computer vision systems are used to check whether products meet specific size and shape standards. For example, on a pipe production line, computer vision can measure the diameter and thickness of each pipe produced.

- ◾ Volumetric measurements of products can also be made with 3D imaging technologies.

- ○ Color and Pattern Analysis:

- ◾ Computer vision is used to check the color, pattern and other visual properties of products. For example, in the textile industry, computer vision can check that the pattern on a fabric is correct and its colors are consistent.

- ◾ By quickly detecting color deviations or pattern errors, it helps maintain quality standards.

- ○ Packaging Check:

- ◾ Computer vision is also used to check that product packaging is correct and intact. It verifies that barcodes and labels are correctly placed and readable.

- ◾ Defects on the packaging, such as tears, mislabeling or missing information, can be detected.

- ○ Assembly and Component Control:

- ◾ Especially in the automotive and electronics industries, it is important to ensure that the components used in assembly processes are correctly positioned. Computer vision checks whether each component is in the correct position.

- ◾ It detects missing or improperly assembled parts, preventing defective products from reaching customers.

- ○ Robotic Integration:

- ◾ When robots are integrated into quality control processes, computer vision enables automated inspection and correction. For example, a robotic arm guided by computer vision can identify faulty products and remove them from the production line.

- ○ Temperature Detection with Thermal Camera:

- ◾ In systems that use ovens, thermal cameras make temperature-based quality control of the oven possible. Temperature measurements can also reveal wear and aging in the oven.

- ○ Volume Measurement for the Process Industry:

- ◾ In industries that handle powders and grains, the quantities produced, stored or supplied as raw materials can be measured volumetrically using computer vision. These volume measurements allow a company to estimate its current production capacity and potential production quantity.

- ● Process Automation:

- ○ Automated Production Line Control:

- ◾ Computer vision provides automated quality control by monitoring and controlling processes on production lines. This allows production processes to be monitored and optimized continuously with minimal human supervision.

- ◾ For example, in automobile manufacturing, computer vision systems can automatically detect assembly errors so they can be corrected, and can ensure that faulty parts are removed from the production line.

- ○ Material and Parts Classification:

- ◾ Computer vision is used to automatically classify materials used in the manufacturing process or parts produced. This ensures that different products are directed to the right places on the production line.

- ◾ For example, in a packaging facility, computer vision systems can identify products and direct them to the right packaging machines.

- ○ Robotic Guidance and Process Control:

- ◾ Computer vision provides visual guidance to robots, enabling them to perform specific tasks. This is especially important in operations such as precision assembly, welding or painting.

- ◾ For example, guided by computer vision, a robot arm can grip a component in the correct position and perform the assembly automatically.

- ○ Machine Parameter Optimization:

- ◾ Industrial machines often require the operator to set various parameters. By using computer vision-based quality control as feedback, the parameters that give the highest quality can be found quickly, even in a dynamic system.

- ○ Product Tracking:

- ◾ Computer vision tracks moving objects on a production line, contributing to process automation. By following the movement of products along the line, it ensures that processes can intervene at the right time.

- ◾ For example, on conveyor belts, the system can monitor whether products are in the correct position and make automatic corrections when necessary.

- ○ Warehouse and Logistics Management:

- ◾ When warehouse automation is integrated with computer vision, the processes for storing, monitoring and transporting products are optimized. Computer vision tracks stock, locates products within the warehouse and guides transport vehicles.

- ◾ For example, robots inside a warehouse can recognize products with computer vision and place them on the right shelves or pick them for orders.

- ○ Comprehensive Data Collection and Analysis:

- ◾ Computer vision collects large amounts of visual data, allowing processes to be analyzed in greater detail. This makes it easier to make data-based decisions for process improvements.

- ◾ For example, visual recording and analysis of each stage in the production process allows the identification of inefficiencies or errors in the processes.

- ● Safety and Security:

- ○ Employee Safety:

- ◾ Computer vision can be used to monitor whether employees are using personal protective equipment (PPE) correctly. It can issue an immediate warning when missing or incorrectly worn PPE, such as helmets, goggles or gloves, is detected.

- ◾ For example, in a factory, a computer vision system can detect whether employees are wearing helmets and notify the manager when a helmet is missing.

- ○ Employee Health and Counting:

- ◾ Using security cameras and computer vision, the people in a factory or office can be counted and their current situation observed. Such systems can also help monitor employees' health and work continuity.

- ○ Danger Zone and Access Control:

- ◾ In industrial facilities, certain areas may be hazardous and should be accessible only to authorized personnel. Computer vision can prevent security breaches by detecting intrusions into these areas.

- ◾ For example, in a chemical plant, the alarm system can be activated as soon as an unauthorized person is detected entering the hazardous materials area.

- ○ Machine and Equipment Safety:

- ◾ Computer vision systems can monitor whether industrial machines and equipment are operating safely. When abnormal vibrations, overheating or misuse are detected, these systems can automatically stop machines or notify the maintenance team.

- ◾ For example, on a production line, when a possible sign of malfunction is detected on the machines, the computer vision system stops the process and alerts the operators.

- ○ Fire and Smoke Detection:

- ◾ Computer vision is used for fire and smoke detection in industrial facilities. Used alongside conventional fire detectors, these systems can detect smoke or signs of fire at an early stage.

- ◾ For example, in a warehouse, when the computer vision system detects signs of smoke, it can automatically trigger the fire alarm and alert the fire brigade.

- ○ Prevention of Work Accidents:

- ◾ Computer vision is used to anticipate potential work accidents. Warning systems are activated when hazardous movement, incorrect equipment use or risky behavior is detected in work areas.

- ◾ For example, when a worker on a production line is detected in a dangerous position, the system can alert both the worker and the managers.

- ○ Vehicle, Forklift and Machine Safety:

- ◾ Vehicles and trucks in industrial facilities can pose serious safety risks, especially in tight areas or in areas with heavy traffic. Computer vision can be used to ensure safe movement of vehicles and trucks.

- ◾ For example, the computer vision system, which monitors the movement of trucks in a warehouse, can alert the operator to prevent a possible collision or automatically stop the vehicle.

- ○ Material and Product Safety:

- ◾ Safe handling and storage of materials is critical to industrial safety. Computer vision can check whether materials are stacked correctly or are in a dangerous position.

- ◾ For example, in a logistics center, a computer vision system that monitors products stored on high shelves can raise an alert when it detects unstable stacking.
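
The following sketch illustrates the size and shape control described above. It measures a pipe's outer diameter from a binary silhouette (1 = pipe, 0 = background), such as one obtained by thresholding a backlit camera image. It assumes the pipe runs horizontally across the frame and that the camera has been calibrated to a known millimeters-per-pixel scale. The function names, scale and tolerance are hypothetical. A production system would also correct lens distortion and typically use sub-pixel edge detection.

```python
from statistics import median


def column_extents(mask: list[list[int]]) -> list[int]:
    """Vertical extent, in pixels, of the foreground in every column that contains any.

    Assumption: the pipe runs horizontally across the image (1 = pipe, 0 = background).
    """
    h, w = len(mask), len(mask[0])
    extents = []
    for x in range(w):
        rows = [y for y in range(h) if mask[y][x]]
        if rows:
            extents.append(rows[-1] - rows[0] + 1)
    return extents


def check_diameter(mask: list[list[int]], mm_per_px: float,
                   nominal_mm: float, tol_mm: float) -> tuple[float, bool]:
    """Return (measured diameter in mm, True if within nominal +/- tolerance).

    mm_per_px, nominal_mm and tol_mm are hypothetical calibration/spec values.
    """
    extents = column_extents(mask)
    if not extents:
        raise ValueError("no pipe found in the image")
    diameter_mm = median(extents) * mm_per_px  # median ignores a few noisy columns
    return diameter_mm, abs(diameter_mm - nominal_mm) <= tol_mm


# A 10x6 silhouette whose pipe occupies rows 2..7 (6 px) at 0.5 mm per pixel.
pipe = [[1 if 2 <= y <= 7 else 0 for x in range(6)] for y in range(10)]
print(check_diameter(pipe, mm_per_px=0.5, nominal_mm=3.0, tol_mm=0.1))  # (3.0, True)
```

Taking the median over all columns keeps a few noisy columns from skewing the result. A stray foreground pixel above the pipe lengthens only its own column.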

Advantages of Computer Vision in Manufacturing and Industry:

- 1. Quality control: Computer vision systems can automatically inspect the quality of products produced on the production line. These systems can detect even small errors that the human eye cannot detect, thereby improving product quality and preventing faulty products from reaching the customer.

- 2. Increasing production speed: Automation accelerates production processes. Machines equipped with computer vision can operate continuously without human intervention, which increases both production speed and productivity.

- 3. Cost savings: Using computer vision systems instead of human labor saves costs in the long run. Especially in high-volume production processes, automated systems make fewer errors and run faster, which reduces labor costs.

- 4. Safety and Employee Health: Computer vision systems enhance employee safety by enabling the automation of hazardous or demanding jobs.

- 5. Data Collection and Analysis: Computer vision systems can collect large amounts of visual data throughout the manufacturing process. This data can be analyzed to understand the root causes of production errors, optimize processes and improve future production strategies. For example, it can identify an operator who is not using the machine correctly and guide the operator.

- 6. Flexibility and adaptability: Computer vision systems can quickly adapt to changes in production processes. For example, a new product design or a change to the production line can be easily integrated with updates to the system's software.

General Computer Vision Tasks

- ● Image Classification: The task of assigning an image to a specific class. It is used to categorize products and to separate defective products on automated production lines.

- ● Object Detection: The task of identifying and locating specific objects in an image. It is used to detect intrusions in security camera footage or missing components on a production line. Objects can also be detected in 3D, and the resulting 3D information can be used in a variety of areas.

- ● Segmentation: The task of assigning each pixel in an image to a class. It is used to detect faulty painting or assembly problems on the production line.

- ● Image Generation: The task of creating new images from a given input. It is used to create new designs during product design and prototyping.

- ● Image Super-Resolution: The process of converting a low-resolution image into a high-resolution one. It is used to improve low-resolution images from security cameras.

- ● Image Description (Captioning): The task of generating a meaningful text description of an image. It is used to create product catalogs automatically or to report events captured by security cameras.

- ● Depth Estimation: The task of estimating the distance of objects in the scene from the camera, using an image or video. This allows robotic arms to manipulate objects correctly and autonomous trucks to move safely.

- ● Image Noise Reduction: The process of reducing noise in an image. It is used to clean production line images taken in low-light conditions.

- ● Image-to-Image Translation: The task of converting an image into another style or domain. This allows quick simulation of different material or color variations in product design.

- ● Visual Question Answering: The task of answering questions about an image. It is used in factory inspection systems to provide automated information about the installation or condition of a product.
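
Of these tasks, noise reduction is simple enough to show without a trained model. The sketch below is a classical 3×3 median filter in plain Python, assuming a grayscale image stored as nested lists. It is not how any particular library implements denoising; OpenCV, for instance, provides its own optimized filters. Replacing each pixel with the median of its neighborhood removes isolated "salt-and-pepper" pixels while keeping sharp edges, which averaging filters tend to blur. The function name is illustrative.

```python
from statistics import median


def median_filter(img: list[list[int]], radius: int = 1) -> list[list[float]]:
    """Replace each pixel by the median of its (2*radius+1) x (2*radius+1) neighborhood.

    Border pixels use only the neighbors that fall inside the image.
    """
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [img[j][i]
                      for j in range(max(0, y - radius), min(h, y + radius + 1))
                      for i in range(max(0, x - radius), min(w, x + radius + 1))]
            row.append(median(window))
        out.append(row)
    return out


# A flat gray patch with one "salt" pixel: the filter removes it completely.
noisy = [[10] * 5 for _ in range(5)]
noisy[2][2] = 255
print(median_filter(noisy)[2][2])  # 10
```

Increasing `radius` removes larger noise blobs but also starts to erase genuine small details, so it is a tuning choice for each camera setup.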

Key technologies used

Many technologies and methods have been developed to perform these basic computer vision tasks.

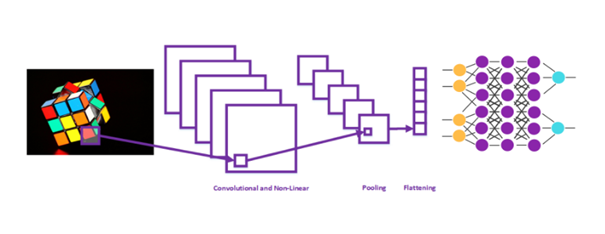

- ● Convolutional Neural Networks (CNNs):

- ○ CNNs are deep learning models used to process and interpret image data. They are widely used in tasks such as image classification and object detection, and state-of-the-art detectors such as YOLO are built on CNN architectures. CNNs are used extensively in image processing and deep learning for several reasons:

- ◾ CNNs are good at capturing the spatial hierarchy in images. Stacked convolution layers build a hierarchy from low-level features (for example, edges) to high-level features (for example, objects). As a result, important features in images can be identified and analyzed.

- ◾ Pooling operations such as max-pooling make CNNs somewhat robust to small shifts of objects in the image, so the model is less sensitive to an object's exact position. Batch normalization and dropout, in turn, stabilize training and reduce overfitting.

- ◾ CNNs slide the same filters (kernels) over every position of the image. This weight sharing greatly reduces the number of parameters and makes the model more efficient, which makes it possible to train deeper networks.

- ◾ CNNs specialize in capturing local correlations in images. Each convolution filter operates on a limited receptive field. Because the same filter is applied everywhere, it can detect important features regardless of where objects appear.

- ◾ CNNs eliminate the need to extract features from images manually. Instead, the model automatically learns the most meaningful features during training. This is a major advantage in tasks such as image recognition, object detection and segmentation.

- ◾ CNNs perform exceptionally well on image processing and computer vision tasks. They are among the most popular deep learning models and often achieve higher accuracy than classical methods.

- ◾ CNNs allow pre-trained models to be reused. With transfer learning, adapting a model to another task requires much less data and much shorter training time.

- ◾ When CNNs are trained on large datasets, they generalize well, so the model also performs well on new data outside the training set.

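
The core operations behind the points above can be sketched in plain Python: a filter sliding over the image with shared weights, a ReLU non-linearity, and max-pooling. This is an illustration on a single-channel image stored as nested lists, with a hand-picked vertical-edge kernel standing in for a learned one. Deep learning frameworks implement the same operations far more efficiently on GPUs. As in most frameworks, `conv2d` computes cross-correlation (the kernel is not flipped), with stride 1 and no padding. All function names are illustrative.

```python
def conv2d(img, kernel):
    """'Valid' 2D convolution (cross-correlation, stride 1, no padding).

    The same kernel weights are reused at every position: this is weight sharing.
    """
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(img), len(img[0])
    return [[sum(kernel[i][j] * img[y + i][x + j] for i in range(kh) for j in range(kw))
             for x in range(w - kw + 1)]
            for y in range(h - kh + 1)]


def relu(fmap):
    """Element-wise ReLU non-linearity."""
    return [[max(0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2):
    """Non-overlapping max-pooling; leftover rows/columns that don't fill a window are dropped."""
    return [[max(fmap[y + i][x + j] for i in range(size) for j in range(size))
             for x in range(0, len(fmap[0]) - size + 1, size)]
            for y in range(0, len(fmap) - size + 1, size)]


def conv_params(in_ch, out_ch, k):
    """Weights + biases of a k x k conv layer; independent of the image size."""
    return out_ch * (in_ch * k * k + 1)


def dense_params(in_features, out_features):
    """Weights + biases of a fully connected layer."""
    return out_features * (in_features + 1)


image = [[0, 0, 0, 9, 9, 9] for _ in range(4)]  # dark left half, bright right half
vertical_edge = [[-1, 0, 1]] * 3                  # hand-picked, not learned
fmap = relu(conv2d(image, vertical_edge))
print(fmap)            # [[0, 27, 27, 0], [0, 27, 27, 0]]: strong response at the edge
print(max_pool(fmap))  # [[27, 27]]
print(conv_params(3, 64, 3), dense_params(224 * 224 * 3, 64))  # 1792 9633856
```

The last line shows why weight sharing matters. A 3×3 convolution with 64 filters on an RGB input has 1,792 parameters whatever the image size. A fully connected layer mapping a 224×224×3 image to just 64 units would need about 9.6 million.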

A visualization of a CNN is shown below:

- ● R-CNN, Fast R-CNN and Faster R-CNN:

Object detection is a technique used to determine the location and class of specific objects in an image or video. R-CNN (Region-based Convolutional Neural Networks) and its improved versions, Fast R-CNN and Faster R-CNN, are among the groundbreaking methods in this field. These models were developed to improve the speed and accuracy of object detection.

- 1. R-CNN (Region-based Convolutional Neural Networks): R-CNN is one of the first region-based approaches to object detection. It works in three steps: region proposal, feature extraction with a convolutional neural network followed by classification, and bounding-box (bbox) regression. Because the CNN must run separately for every proposed region, this approach requires a lot of computation, which makes processing slow.

- 2. Fast R-CNN: Fast R-CNN was developed to solve R-CNN's speed problem. Its key innovation is that the entire image is passed through the CNN once to produce a shared feature map. Features for each region proposal are then taken from this feature map (using RoI pooling), which is much faster and more efficient. This eliminates the need to run the CNN separately for each region proposal.

- 3. Faster R-CNN: Faster R-CNN went a big step further by also optimizing the region proposal process. It integrates the region proposal stage into the network itself, producing an end-to-end structure that greatly improves speed and accuracy. Its most important innovation is the Region Proposal Network (RPN). The RPN slides a small window over the convolutional feature map and, at each location, proposes potential object regions relative to a set of reference boxes (anchors). Because the RPN is fully convolutional and shares features with the detector, it generates region proposals quickly.

The evolution from R-CNN to Faster R-CNN shows how speed and accuracy can be optimized together in object detection. These models are used in many computer vision applications today and have been especially successful in tasks such as object detection and classification.
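
The anchor mechanism of the RPN can be sketched in a few lines of Python. This is a simplified illustration, not the reference Faster R-CNN implementation. Boxes are (x1, y1, x2, y2) in input-image pixels. The feature-map stride of 16 and the three scales and three aspect ratios are typical example values. `iou` is the overlap measure used to match anchors to ground-truth boxes. Following the Faster R-CNN paper, anchors with IoU above 0.7 are labeled as objects and those below 0.3 as background. The paper's extra rule that the best-matching anchor for each ground-truth box is also positive is omitted here. Function names are illustrative.

```python
import math


def make_anchors(feat_h, feat_w, stride=16, scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """len(scales) * len(ratios) anchor boxes centered on every feature-map cell."""
    anchors = []
    for fy in range(feat_h):
        for fx in range(feat_w):
            cx, cy = (fx + 0.5) * stride, (fy + 0.5) * stride  # cell center in image pixels
            for s in scales:
                for r in ratios:  # r = height / width; the area stays s * s
                    w, h = s / math.sqrt(r), s * math.sqrt(r)
                    anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors


def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def label_anchors(anchors, gt_boxes, pos_thresh=0.7, neg_thresh=0.3):
    """1 = object, 0 = background, -1 = ignored during training (simplified rule)."""
    labels = []
    for a in anchors:
        best = max((iou(a, g) for g in gt_boxes), default=0.0)
        labels.append(1 if best > pos_thresh else 0 if best < neg_thresh else -1)
    return labels


print(len(make_anchors(38, 50)))  # 38 * 50 cells * 9 anchors = 17100
```

Because anchors are defined on the feature map, the RPN only has to predict an objectness score and a small box correction for each anchor, instead of searching the image for candidate regions.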

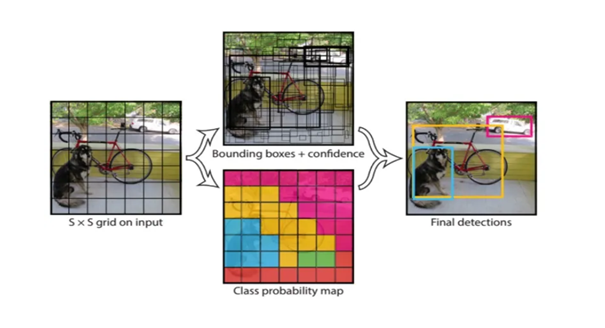

- ● YOLO (You Only Look Once):

YOLO is known as a groundbreaking model in object detection and is widely used in computer vision applications. Its defining feature is that it performs object detection very quickly and efficiently, in real time. Many versions of YOLO have been developed, and each new version further improves performance and accuracy. YOLO processes an image in a single pass. The image is divided into an S×S grid, and the grid cell that contains the center of an object is responsible for detecting it. Each cell predicts a fixed number of bounding boxes with confidence scores, along with the class of the object. You can see an example of this in the image below.

YOLO is a revolutionary model for object detection. Thanks to its speed and accuracy, it is preferred in many real-time applications. Each new version further extends its capabilities, making it an indispensable tool in computer vision. Its core principles and advantages make it important both in research and in industrial applications.

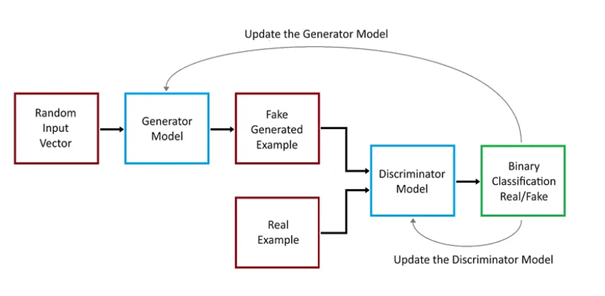
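
The grid idea can be made concrete with a short sketch. It follows the original YOLO convention under simplifying assumptions:

- the image is split into an S×S grid (S = 7 and a 448×448 input in the first YOLO paper);
- the cell containing an object's center is responsible for it;
- a box is described by the (x, y) offset of its center within that cell, plus its width and height relative to the whole image.

Function names are illustrative. Later YOLO versions use anchors and multi-scale grids, which are not shown.

```python
def responsible_cell(cx, cy, img_w, img_h, S=7):
    """(row, col) of the grid cell that contains the point (cx, cy)."""
    col = min(int(cx * S / img_w), S - 1)  # clamp centers lying exactly on the right edge
    row = min(int(cy * S / img_h), S - 1)  # ...or on the bottom edge
    return row, col


def encode(box, img_w, img_h, S=7):
    """(x1, y1, x2, y2) pixel box -> (row, col, (tx, ty, tw, th)) training target."""
    x1, y1, x2, y2 = box
    cx, cy = (x1 + x2) / 2, (y1 + y2) / 2
    row, col = responsible_cell(cx, cy, img_w, img_h, S)
    tx = cx * S / img_w - col  # center offset inside the cell, in [0, 1]
    ty = cy * S / img_h - row
    tw = (x2 - x1) / img_w     # box size relative to the whole image
    th = (y2 - y1) / img_h
    return row, col, (tx, ty, tw, th)


def decode(row, col, t, img_w, img_h, S=7):
    """Inverse of `encode`: cell-relative prediction -> (x1, y1, x2, y2) in pixels."""
    tx, ty, tw, th = t
    cx = (col + tx) * img_w / S
    cy = (row + ty) * img_h / S
    w, h = tw * img_w, th * img_h
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


row, col, target = encode((68, 184, 132, 216), 448, 448)
print(row, col)  # 3 1: the box center (100, 200) falls in row 3, column 1 of the 7x7 grid
```

Because every cell predicts its boxes in the same forward pass, the whole image is handled at once. This is what makes YOLO fast enough for real-time use.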

- ● Generative Adversarial Networks (GANs):

Generative Adversarial Networks (GANs) are a very popular and effective type of artificial intelligence model. Introduced by Ian Goodfellow and colleagues in 2014, they revolutionized image generation, data augmentation and synthetic data generation. A GAN consists of two main components: a generator and a discriminator. The two models are trained by competing with each other, as in a game. The generator tries to produce data that is good enough to fool the discriminator, while the discriminator tries to tell this fake data apart from real data. Training continues until the generator produces data realistic enough that the discriminator can no longer reliably distinguish it from real data. The relationship between these two models is as follows:

GANs are a major innovation in deep learning and artificial intelligence. Having opened new doors in areas such as image generation and data augmentation, they may form the basis of many future applications. For example, with SRGAN, a low-resolution image can be converted to a higher resolution.

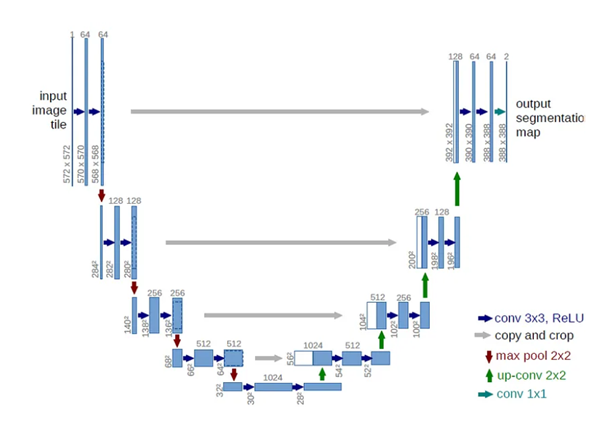
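
The adversarial game can be written as two loss functions. The sketch below computes them from discriminator outputs, which are assumed to be probabilities in (0, 1) that a sample is real. The generator loss shown is the common "non-saturating" variant suggested in the original GAN paper: minimize -log D(G(z)) instead of minimizing log(1 - D(G(z))) directly. The networks and optimizers are deliberately left out, and the function names are illustrative.

```python
import math


def bce(p, target, eps=1e-12):
    """Binary cross-entropy of one predicted probability p against a 0/1 target."""
    p = min(max(p, eps), 1 - eps)  # avoid log(0)
    return -(target * math.log(p) + (1 - target) * math.log(1 - p))


def discriminator_loss(d_real, d_fake):
    """D wants D(x) -> 1 on real samples and D(G(z)) -> 0 on generated ones."""
    real = sum(bce(p, 1) for p in d_real) / len(d_real)
    fake = sum(bce(p, 0) for p in d_fake) / len(d_fake)
    return real + fake


def generator_loss(d_fake):
    """Non-saturating generator loss: G wants D(G(z)) -> 1, i.e. minimize -log D(G(z))."""
    return sum(bce(p, 1) for p in d_fake) / len(d_fake)


# A discriminator that is winning: confident on real data, rejects fakes.
print(discriminator_loss([0.9], [0.1]))  # low
print(generator_loss([0.1]))             # high: the generator must improve
```

When the discriminator can no longer tell real from fake and outputs 0.5 everywhere, its loss settles at 2 log 2 ≈ 1.386. This is the value at the game's theoretical equilibrium.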

- ● U-Net:

U-Net is a neural network architecture used particularly in biomedical image processing, and it is also effective in other image segmentation tasks. Developed by Olaf Ronneberger and his team in 2015, it is distinguished by its ability to achieve highly accurate results with limited training data. U-Net takes its name from its symmetrical structure, which resembles the letter "U" and consists of two main parts: the encoder and the decoder. The encoder converts the input image into a series of increasingly smaller feature maps. The decoder uses these maps, together with skip connections from the encoder, to produce a pixel-by-pixel segmentation mask at the resolution of the input image (somewhat smaller with the unpadded convolutions of the original design). Combining the two stages gives U-Net its symmetrical structure. The architecture from which U-Net gets its name is as follows:

U-Net is a revolutionary architecture in image segmentation. Having proven itself especially in the biomedical field, it is also successfully applied to other image processing tasks. Thanks to its skip connections and fully convolutional structure, it performs well even when little data is available. More recent segmentation models, such as Segment Anything (SAM) developed by Meta (Facebook), combine encoder-decoder ideas in the spirit of U-Net with Transformer technology.
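
The encoder-decoder structure and skip connections can be traced by following feature-map sizes through the network. The sketch below only does the bookkeeping; it is not a trainable model. It assumes the layout of the original U-Net:

- four 2×2 max-pooling steps;
- two 3×3 convolutions per level;
- 64 channels at the first level, doubling at each step down;
- 2×2 up-convolutions that halve the channels on the way up.

With `padded=False` (unpadded convolutions, as in the original paper), a 572×572 input yields a 388×388 output. With `padded=True`, as in many modern implementations, the output matches the input size. The function name is hypothetical.

```python
def unet_shapes(size, depth=4, base_channels=64, padded=True):
    """Return (stage, spatial_size, channels) entries for a U-Net-style network."""
    loss = 0 if padded else 4  # two 3x3 convs: 'same' padding keeps size, 'valid' loses 4 px
    trace, skips, ch = [], [], base_channels
    for level in range(depth):                 # contracting path (encoder)
        size -= loss                           # double 3x3 conv
        trace.append((f"enc{level}", size, ch))
        skips.append((size, ch))               # kept for the skip connection
        if size % 2:
            raise ValueError(f"odd size {size} cannot be max-pooled evenly")
        size, ch = size // 2, ch * 2           # 2x2 max-pool; double the channels
    size -= loss                               # bottleneck double conv
    trace.append(("bottleneck", size, ch))
    for level in reversed(range(depth)):       # expanding path (decoder)
        size, ch = size * 2, ch // 2           # 2x2 up-conv: double size, halve channels
        skip_size, skip_ch = skips[level]
        if skip_size < size:
            raise ValueError("skip map smaller than decoder map")
        # The skip map is center-cropped to `size` (a no-op when padded) and
        # concatenated along channels before the next double conv.
        trace.append((f"concat{level}", size, ch + skip_ch))
        size -= loss                           # double 3x3 conv back to `ch` channels
        trace.append((f"dec{level}", size, ch))
    return trace


for stage in unet_shapes(572, padded=False):
    print(stage)  # ends with ('dec0', 388, 64); a final 1x1 conv would map to class scores
```

The `concat` entries show what the skip connections contribute: each decoder level sees twice as many channels as it outputs, half of them carrying fine spatial detail straight from the encoder.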

- ● Feature Pyramid Network (FPN) is a feature extractor that takes a single-scale image of arbitrary size as input and produces proportionally sized feature maps at multiple levels. This is done in a fully convolutional way and is independent of the backbone convolutional architecture. FPN therefore works as a general solution for building feature pyramids inside deep convolutional networks for tasks such as object detection.

- ● Vision Transformer (ViT) is a model for image classification that applies a Transformer architecture to patches of the image. The image is split into fixed-size patches, and each patch is linearly embedded. Position embeddings are added, and the resulting sequence of vectors is fed into a standard Transformer encoder. For classification, an extra learnable "classification token" is added to the sequence (prepended in the original ViT), and its output is used to predict the class.

- ● Residual Networks (ResNets) learn residual functions with reference to the layer inputs instead of learning unreferenced functions. Rather than expecting a few stacked layers to learn the desired underlying mapping H(x) directly, ResNets let these layers learn the residual F(x) = H(x) - x. A shortcut connection then adds the input back, so the block outputs F(x) + x. ResNets are built by stacking such residual blocks; for example, ResNet-50 is a 50-layer network built from these blocks.
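
The ViT input pipeline (splitting the image into patches, flattening them and adding a classification token) can be traced with a short sketch. It assumes a single-channel image as nested lists, a square patch size that divides the image evenly, and placeholder values for the classification token. In a real ViT, each flattened patch is then multiplied by a learned projection matrix and position embeddings are added; both steps are omitted here. For the common ViT-B/16 setting, a 224×224 RGB image gives (224/16)² = 196 patches of 16·16·3 = 768 values each. Adding one classification token makes 197 tokens. Function names are illustrative.

```python
def patchify(img, patch):
    """Split an H x W image into non-overlapping patch x patch blocks, each flattened row-major."""
    h, w = len(img), len(img[0])
    if h % patch or w % patch:
        raise ValueError("image size must be a multiple of the patch size")
    patches = []
    for py in range(0, h, patch):          # patches are ordered left-to-right, top-to-bottom
        for px in range(0, w, patch):
            patches.append([img[py + i][px + j] for i in range(patch) for j in range(patch)])
    return patches


def token_sequence(img, patch, cls_token):
    """Prepend the classification token (learnable in ViT, a placeholder here) to the patches."""
    return [cls_token] + patchify(img, patch)


def vit_token_count(height, width, patch):
    """Number of tokens fed to the Transformer encoder: patches + 1 classification token."""
    return (height // patch) * (width // patch) + 1


img = [[4 * r + c for c in range(4)] for r in range(4)]  # 4x4 image with values 0..15
print(patchify(img, 2))                # [[0, 1, 4, 5], [2, 3, 6, 7], [8, 9, 12, 13], [10, 11, 14, 15]]
print(vit_token_count(224, 224, 16))   # 197
```

Treating patches as tokens is what lets ViT reuse the standard Transformer encoder unchanged. The cost of self-attention grows with the square of the token count, which is why patch size matters.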

In addition to these technologies, other important and frequently used methods include Mask R-CNN for instance segmentation and DeepLab for semantic segmentation; FaceNet and DeepFace for facial recognition; OpenPose and DensePose for pose estimation; and Neural Radiance Fields (NeRF) and Gaussian Splatting for 3D modeling.

Software and Hardware for Computer Vision

Software:

- ● Image Processing:

- ○ OpenCV (Open Source Computer Vision Library) is one of the most widely used open source libraries in computer vision projects. It can be used from languages such as C++, Python and Java. OpenCV offers optimized functions for a wide range of computer vision tasks, such as image processing, video analysis, object recognition, face recognition, optical character recognition (OCR) and motion tracking. Thanks to its large community and extensive documentation, it can be used in projects from beginner to advanced level.

- ● Model Building and Computer Vision Applications:

- ○ TensorFlow: Developed by Google, TensorFlow is one of the most popular open source libraries for building and training deep learning models. Capable of high-performance mathematical calculations, this library offers a wide set of tools for quickly building and optimizing neural networks. TensorFlow also provides APIs for low-level calculations, as well as a user-friendly experience by integrating with Keras, the higher-level API.

- ○ Keras: Keras is a user-friendly deep learning library that runs on top of back-ends such as TensorFlow (historically also Theano; Keras 3 additionally supports JAX and PyTorch). It makes it easy to build complex neural networks. Because of its high level of abstraction, it is especially well suited to beginners and to rapid prototyping. Keras provides a simple, intuitive API for defining model layers and training models.

- ○ Ultralytics: Ultralytics is an artificial intelligence and computer vision company and library. It is known especially for its YOLOv5 (You Only Look Once) model and later YOLO versions such as YOLOv8. YOLOv5 is widely used in real-time object detection and classification tasks and is known for being fast, lightweight and accurate. Ultralytics provides a user-friendly interface for developing, training and deploying these models. The library runs on PyTorch and allows users to easily train object detection models on their own datasets. Thanks to its extensive documentation and active community, Ultralytics is a powerful tool for researchers and developers and is often chosen for computer vision projects.

- ○ Hugging Face: Although Hugging Face is best known as a company and open source platform for natural language processing (NLP), in recent years it has also had a broad impact on other areas of artificial intelligence, including computer vision. Hugging Face is particularly known for Transformer-based models and enables a wide range of applications of these models. In computer vision, it offers pre-trained models for tasks such as image classification, object detection and image segmentation, along with a simple interface for using them. Hugging Face libraries such as transformers and datasets allow researchers and developers to quickly train powerful models on large datasets. In addition, its community-oriented model-sharing platform lets users upload their own models and use models shared by others, making AI and computer vision projects more accessible.

- ○ PyTorch: Developed by Facebook (now Meta), PyTorch is a deep learning library that stands out for its flexible, dynamic computational graph. Like TensorFlow, PyTorch is used to train large-scale deep learning models. However, its dynamic computational graphs and tight integration with Python make it especially suitable for research and development. These features also speed up model development and debugging.

- ○ Scikit-learn is one of the most popular machine learning libraries for Python. It offers implementations of a wide range of basic machine learning algorithms, covering common techniques such as classification, regression, clustering and dimensionality reduction. It is frequently used by data scientists and researchers and also provides tools for data preprocessing and model evaluation.

- ● Data Processing, Visualization and Analysis:

- ○ NumPy: NumPy is a basic library for scientific calculations in Python. It provides multidimensional array objects and a large collection of mathematical functions that work on these arrays. NumPy is used as a basic building block for data processing and mathematical calculations in machine learning and data science projects. Thanks to high-efficiency array calculations, it enables fast processing of large data sets, and many machine learning libraries are based on NumPy.

- ○ Matplotlib: Matplotlib is one of the most popular libraries used for data visualization in Python. Provides versatile tools for creating graphics and charts. With matplotlib, you can create histograms, bar graphs, line graphs, and more complex visualizations. The library is very important for data analysis and visual presentation of results. Also, thanks to the customization capabilities, it is possible to detail the graphics as desired.

- ○ Seaborn is a library built on Matplotlib that makes it easier to create more aesthetic and statistical graphics. Seaborn makes working with datasets and visualizing that data more intuitive. It is especially used during data analysis and exploratory data analysis (EDA). Cross-graphs provide advanced tools for visualizing categorical data, as well as heat maps and scatter plots. Seaborn’s default style and color palettes make the visualizations more appealing.

- ○ Pandas: Pandas is a powerful library for data manipulation and analysis. It offers table-like data structures, the "DataFrame" and "Series" objects, together with advanced functions for organizing, filtering, grouping and transforming data. Pandas makes it easy to analyze data quickly and streamline workflows, especially when working with large datasets. With features such as SQL-like queries, time series analysis and missing-data handling, Pandas is an indispensable tool for data scientists and analysts.
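
To make the NumPy description concrete, the following minimal sketch shows the vectorized array arithmetic it refers to, applied to a tiny hypothetical RGB frame rather than a real camera image. It converts the frame to grayscale with the widely used ITU-R BT.601 luma weights and computes the fraction of bright pixels; the helper names `to_grayscale` and `bright_fraction` and the threshold of 128 are illustrative assumptions.

```python
import numpy as np


def to_grayscale(rgb: np.ndarray) -> np.ndarray:
    """Convert an (H, W, 3) RGB array to an (H, W) grayscale array (BT.601 weights)."""
    weights = np.array([0.299, 0.587, 0.114])
    # The matrix product contracts the last (channel) axis for every pixel at once.
    return rgb.astype(np.float64) @ weights


def bright_fraction(gray: np.ndarray, threshold: float) -> float:
    """Fraction of pixels brighter than `threshold`, via the mean of a boolean mask."""
    return float((gray > threshold).mean())


# Hypothetical 2x2 frame: white, black, pure red and pure green pixels.
frame = np.array([[[255, 255, 255], [0, 0, 0]],
                  [[255, 0, 0], [0, 255, 0]]], dtype=np.uint8)
gray = to_grayscale(frame)
print(gray.round(2))
print(bright_fraction(gray, threshold=128))
```

Because both steps operate on whole arrays without explicit Python loops, the same code works unchanged on a full-resolution frame.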
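
As a hedged illustration of the Pandas operations listed above (missing-data handling, grouping and filtering), the sketch below summarizes a hypothetical inspection log produced by a vision system; the column names and values are invented for the example.

```python
import pandas as pd

# Hypothetical per-item inspection log from two production lines.
log = pd.DataFrame({
    "line": ["A", "A", "A", "B", "B", "B"],
    "defect": [False, True, False, False, True, True],
    "score": [0.12, 0.91, 0.08, 0.05, None, 0.77],  # None becomes NaN (a missing reading)
})

# Missing-data handling: fill the absent score with the column median.
log["score"] = log["score"].fillna(log["score"].median())

# Grouping: defect rate and mean score per production line (named aggregation).
summary = log.groupby("line").agg(
    defect_rate=("defect", "mean"),
    mean_score=("score", "mean"),
)
print(summary)

# Filtering: keep only the items flagged as defective.
print(log[log["defect"]])
```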
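
The scikit-learn description mentions pre-processing, dimensionality reduction, classification and model evaluation. A minimal sketch chaining these steps in one pipeline follows; it assumes synthetic data from `make_classification` as a stand-in for features extracted from product images, and the parameter values are arbitrary choices for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for 20-dimensional feature vectors from product images.
X, y = make_classification(n_samples=300, n_features=20, n_informative=5, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)

# Scaling (pre-processing), PCA (dimensionality reduction) and a classifier in one pipeline.
model = make_pipeline(StandardScaler(), PCA(n_components=5), LogisticRegression())
model.fit(X_train, y_train)

# Model evaluation on the held-out split.
accuracy = accuracy_score(y_test, model.predict(X_test))
print(f"test accuracy: {accuracy:.2f}")
```

Wrapping the steps in a pipeline ensures the scaler and PCA are fitted only on the training split, which avoids leaking test data into pre-processing.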

Hardware:

- ● Graphics processor:

- ○ The GPU (Graphics Processing Unit) is of great importance in artificial intelligence and deep learning. Training and running AI models often involves large amounts of data and complex calculations. CPUs (Central Processing Units) are optimized for general-purpose processing with a small number of powerful cores, whereas GPUs can perform thousands of operations simultaneously thanks to their massively parallel architecture. This parallelism allows much faster training of AI algorithms on large datasets. In deep learning in particular, GPUs are indispensable for computationally intensive operations such as matrix multiplications and tensor operations. By significantly shortening training times, GPUs enable faster prototyping and application development. As a result, much of the recent progress in artificial intelligence has been closely tied to advances in GPU technology.

- ● Camera Systems:

- ○ The various types of cameras used in computer vision are optimized for different application needs.

- ◾ RGB cameras are widely used to produce color images, while monochrome (black-and-white) cameras are preferred for applications that require higher contrast and sensitivity.

- ◾ Thermal cameras detect the infrared radiation emitted by objects and, by revealing heat differences, are used for night vision and temperature-based analysis.

- ◾ Depth cameras, on the other hand, provide distance information that captures the three-dimensional structure of objects, and they play a critical role in autonomous vehicles and robotic applications.

- ◾ Spectral cameras (such as multispectral and hyperspectral cameras) sense specific wavelengths of light and are used in specialized areas such as material recognition.

- ○ Because each camera type is suited to particular tasks, choosing the right camera is essential to a project's success.

- ● Sensors:

- ○ Various sensors used in industrial applications play an important role in automation and quality control processes.

- ◾ LIDAR sensors are used to navigate automated guided vehicles (AGVs) in factory environments; with LIDAR, these vehicles can map their surroundings, avoid obstacles and adjust their routes dynamically.

- ◾ Ultrasonic sensors are commonly used to detect the presence or absence of objects on production lines; for example, they can distinguish between full and empty bottles on a bottling line.

- ◾ IMU (Inertial Measurement Unit) sensors help control the precise movements of robotic arms so that millimeter-level accuracy can be achieved in assembly operations. By measuring acceleration and angular velocity, these sensors track the orientation and motion of the robots, allowing them to perform complex movements reliably.

- ◾ Thermal cameras and sensors are used for quality control in metallurgy and electronics production; for example, on a soldering line, thermal sensors can monitor the temperature of solder joints to detect defects in the manufacturing process.

- ◾ Spectrometer sensors are used for quality control in the food industry; for example, by analyzing specific wavelengths of light, they can determine the ripeness of fruits and vegetables or their chemical composition.

- ◾ GPS sensors are used for tracking and automatically routing materials in large logistics centers; this allows products to be quickly transported to the correct storage area.

- ◾ Lasers can guide camera systems when scanning a product and help reveal its defects; for example, a laser line projected onto the surface lets the camera measure the product's 3D profile.

- ○ These and other sensors form the foundation of automation and robotics, making industrial processes more efficient, safer and higher in quality.

- ● Data Storage Solutions:

- ○ In industrial automation, database solutions enable computer vision systems to store, manage and quickly retrieve large amounts of visual data. For example, computer vision systems used for quality control on production lines analyze an image of each product and store the results in a database. In stock management, cameras read product barcodes to update the inventory records held in the database. In robotic assembly lines and predictive maintenance systems, databases also store visual data used to monitor machine performance and anticipate maintenance needs. Together, these uses increase the efficiency, accuracy and reliability of industrial processes.
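
For the depth cameras described above, a depth pixel is commonly turned into a 3D point with the pinhole camera model: X = (u - cx) · Z / fx and Y = (v - cy) · Z / fy. The sketch below assumes hypothetical intrinsics (focal lengths fx, fy and principal point cx, cy, in pixels) and depth already expressed in metres; real values come from the camera's calibration, and lens distortion is ignored here.

```python
from typing import NamedTuple


class Intrinsics(NamedTuple):
    fx: float  # focal length along x, in pixels
    fy: float  # focal length along y, in pixels
    cx: float  # principal point x, in pixels
    cy: float  # principal point y, in pixels


def deproject(u: float, v: float, depth_m: float, k: Intrinsics) -> tuple[float, float, float]:
    """Back-project pixel (u, v) with depth Z into camera coordinates (X, Y, Z) in metres."""
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


# Hypothetical calibration for a 640x480 depth sensor.
k = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)
print(deproject(320, 240, 1.5, k))  # (0.0, 0.0, 1.5): a point on the optical axis
print(deproject(420, 240, 1.5, k))  # (0.25, 0.0, 1.5)
```

Applying the same function to every pixel of a depth frame yields a point cloud of the scene.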
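
The LIDAR bullet mentions obstacle avoidance for AGVs. As a deliberately simplified sketch, assuming a 2D scan given as (angle, range) pairs with angle 0 pointing straight ahead, an AGV could stop or slow down when any valid return inside its forward sector is closer than a safety distance. The sector width and the 0.8 m distance are hypothetical, and real navigation systems combine such checks with full mapping and path planning.

```python
import math


def obstacle_ahead(scan: list[tuple[float, float]],
                   half_width_rad: float = math.radians(30),
                   safety_m: float = 0.8) -> bool:
    """Return True if any valid return in the forward sector is closer than safety_m.

    `scan` holds (angle_rad, range_m) pairs with angles in (-pi, pi] and 0 = straight ahead.
    """
    for angle, distance in scan:
        if not math.isfinite(distance) or distance <= 0.0:
            continue  # skip missing or invalid returns
        if abs(angle) <= half_width_rad and distance < safety_m:
            return True
    return False


# Hypothetical scans: a clear path, then an object 0.5 m away at +10 degrees.
clear = [(math.radians(a), 3.0) for a in range(-90, 91, 10)]
blocked = clear + [(math.radians(10), 0.5)]
print(obstacle_ahead(clear))    # False
print(obstacle_ahead(blocked))  # True
```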
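
The ultrasonic bullet relies on time of flight: the sensor measures the echo's round-trip time, so the distance to the reflecting surface is (speed of sound × time) / 2. The sketch below assumes sound travels at about 343 m/s (air at 20 °C), a sensor mounted above the bottles, and a hypothetical rule that a bottle is full when the liquid surface is within 0.12 m of the sensor.

```python
SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in air at 20 °C


def echo_distance_m(round_trip_s: float) -> float:
    """Distance to the reflecting surface from the echo's round-trip time."""
    # The pulse travels to the surface and back, so halve the total path length.
    return SPEED_OF_SOUND_M_S * round_trip_s / 2.0


def is_bottle_full(round_trip_s: float, max_distance_to_liquid_m: float) -> bool:
    """Hypothetical rule: full if the liquid surface is close enough to the sensor."""
    return echo_distance_m(round_trip_s) <= max_distance_to_liquid_m


print(echo_distance_m(0.0006))        # about 0.103 m
print(is_bottle_full(0.0006, 0.12))   # True
print(is_bottle_full(0.0012, 0.12))   # False (about 0.206 m)
```

In practice the threshold would be calibrated for the bottle height and the mounting position of the sensor.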
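
IMUs report angular rate (gyroscope) and acceleration (accelerometer). One common way to fuse them into an orientation estimate is a complementary filter, sketched below for a single tilt angle. The blending factor alpha = 0.98, the 100 Hz sample rate and the gyroscope bias are hypothetical, and this illustrates the general technique rather than how any particular robot controller works.

```python
import math


def complementary_filter(samples: list[tuple[float, float, float]], dt: float,
                         alpha: float = 0.98, angle0: float = 0.0) -> float:
    """Fuse gyroscope and accelerometer readings into one tilt (roll) angle in radians.

    Each sample is (gyro_rate_rad_s, ay, az): the angular rate about the x-axis and
    the gravity components measured along the sensor's y and z axes.
    """
    angle = angle0
    for gyro_rate, ay, az in samples:
        gyro_angle = angle + gyro_rate * dt  # integrated rate: smooth but drifts over time
        accel_angle = math.atan2(ay, az)     # gravity direction: noisy but drift-free
        angle = alpha * gyro_angle + (1.0 - alpha) * accel_angle
    return angle


# Hypothetical stationary sensor tilted by 10 degrees, sampled at 100 Hz for 20 s,
# with a gyroscope that has a small constant bias of 0.01 rad/s.
tilt = math.radians(10)
samples = [(0.01, math.sin(tilt), math.cos(tilt))] * 2000
estimate = complementary_filter(samples, dt=0.01)
print(f"estimated tilt: {math.degrees(estimate):.2f} degrees")
```

Integrating the biased gyroscope alone would accumulate 0.2 rad (about 11.5 degrees) of drift over this run; the accelerometer term keeps the estimate within a fraction of a degree of the true 10 degrees.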
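
For the soldering-line example, a thermal camera delivers a temperature map, and one simple inspection rule is to flag joints whose temperature falls outside an acceptable window. The sketch below averages a 3x3 neighborhood around each joint to reduce noise; the joint positions, the frame values and the 230 to 260 °C window are hypothetical and not real soldering specifications.

```python
def joint_temperature(temp_map: list[list[float]], row: int, col: int) -> float:
    """Mean temperature (°C) of the 3x3 neighborhood around (row, col), clipped at the borders."""
    values = [
        temp_map[r][c]
        for r in range(max(0, row - 1), min(len(temp_map), row + 2))
        for c in range(max(0, col - 1), min(len(temp_map[0]), col + 2))
    ]
    return sum(values) / len(values)


def inspect_joints(temp_map: list[list[float]], joints: dict[str, tuple[int, int]],
                   min_c: float, max_c: float) -> dict[str, str]:
    """Label each joint 'ok' or 'out of range' against the given temperature window."""
    report = {}
    for name, (row, col) in joints.items():
        temperature = joint_temperature(temp_map, row, col)
        report[name] = "ok" if min_c <= temperature <= max_c else "out of range"
    return report


# Hypothetical 4x4 thermal frame in °C: J1 sits in a hot region, J2 in a cooler one.
frame = [
    [238, 241, 240, 180],
    [239, 242, 239, 182],
    [200, 201, 185, 181],
    [199, 202, 183, 184],
]
joints = {"J1": (0, 1), "J2": (3, 3)}
print(inspect_joints(frame, joints, min_c=230, max_c=260))  # {'J1': 'ok', 'J2': 'out of range'}
```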
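
The laser bullet can be illustrated with sheet-of-light triangulation, a common laser-and-camera profiling setup: a laser line strikes the part at an angle, and a raised or lowered surface shifts the line sideways in the camera image. Assuming an overhead camera, a laser inclined at angle θ from vertical and a known millimetres-per-pixel scale, the height is h = shift / tan(θ). All numeric values below, and the sign convention for the shift, are hypothetical.

```python
import math


def profile_heights_mm(observed_px: list[float], reference_px: list[float],
                       mm_per_px: float, laser_angle_deg: float) -> list[float]:
    """Convert the laser line's shift in each image column into a surface height in mm.

    Assumes an overhead camera, a laser inclined `laser_angle_deg` from vertical, and
    that a positive shift (observed - reference) means the surface is raised.
    """
    tan_theta = math.tan(math.radians(laser_angle_deg))
    return [(obs - ref) * mm_per_px / tan_theta
            for obs, ref in zip(observed_px, reference_px)]


def defect_columns(heights_mm: list[float], nominal_mm: float, tolerance_mm: float) -> list[int]:
    """Indices of columns whose height deviates from nominal by more than the tolerance."""
    return [i for i, h in enumerate(heights_mm) if abs(h - nominal_mm) > tolerance_mm]


# Hypothetical scan of a flat 2 mm part with a dent in column 3.
# Laser at 45 degrees (tan = 1), 0.1 mm per pixel.
reference = [100.0] * 6
observed = [120.0, 120.0, 120.0, 110.0, 120.0, 120.0]
heights = profile_heights_mm(observed, reference, mm_per_px=0.1, laser_angle_deg=45)
print([round(h, 2) for h in heights])  # [2.0, 2.0, 2.0, 1.0, 2.0, 2.0]
print(defect_columns(heights, nominal_mm=2.0, tolerance_mm=0.2))  # [3]
```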
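
The data-storage bullet describes vision systems writing per-product results to a database. The sketch below uses Python's built-in `sqlite3` module with an in-memory database as a minimal stand-in; the `inspections` table and its columns are hypothetical, and storing only a path to each image rather than the image itself is one common design choice, not a requirement.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # in-memory database, for illustration only
conn.execute("""
    CREATE TABLE inspections (
        id         INTEGER PRIMARY KEY,
        product_id TEXT NOT NULL,
        line       TEXT NOT NULL,
        defect     INTEGER NOT NULL,  -- 1 if the vision system flagged a defect
        image_path TEXT               -- reference to the stored image file
    )
""")

# Hypothetical results written by the inspection camera.
rows = [
    ("P-001", "A", 0, "img/P-001.png"),
    ("P-002", "A", 1, "img/P-002.png"),
    ("P-003", "B", 0, "img/P-003.png"),
]
conn.executemany(
    "INSERT INTO inspections (product_id, line, defect, image_path) VALUES (?, ?, ?, ?)",
    rows,
)
conn.commit()

# Total inspections and defect count per line, e.g. for a quality dashboard.
for line, total, defects in conn.execute(
    "SELECT line, COUNT(*), SUM(defect) FROM inspections GROUP BY line ORDER BY line"
):
    print(line, total, defects)
```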

These software tools and hardware components enable the efficient implementation of computer vision applications and accelerate developments in the field.

References

- 1. IBM. (n.d.). What is computer vision? Retrieved from https://www.ibm.com/topics/computer-vision

- 2. Microsoft Azure. (n.d.). What is computer vision? Retrieved from https://azure.microsoft.com/en-us/resources/cloud-computing-dictionary/what-is-computer-vision#object-classification

- 3. Wang, M., & Deng, W. (2021). Deep face recognition: A survey.

- 4. Roy, D. (2021). Computer Vision Application in Industrial Automation.

- 5. Itransition. (n.d.). Computer vision in manufacturing: Enhancing productivity & quality control. Retrieved August 12, 2024, from https://www.itransition.com/computer-vision/manufacturing

- 6. Hugging Face. (n.d.). Hugging Face: The AI community building the future. Retrieved August 12, 2024, from https://huggingface.co/

- 7. Papers with Code. (n.d.). Papers with Code: The latest in machine learning. Retrieved August 12, 2024, from https://paperswithcode.com/

- 8. PyTorch. (n.d.). PyTorch: An open source machine learning framework. Retrieved August 12, 2024, from https://pytorch.org/

- 9. Keras. (n.d.). Keras: The Python deep learning API. Retrieved August 12, 2024, from https://keras.io/

- 10. TensorFlow. (n.d.). TensorFlow: An open-source machine learning framework. Retrieved August 12, 2024, from https://www.tensorflow.org/

- 11. Coursera. (n.d.). Top Python machine learning libraries in 2023. Retrieved August 12, 2024, from https://www.coursera.org/articles/python-machine-learning-library

- 12. IBM. (n.d.). What are convolutional neural networks? Retrieved August 13, 2024, from https://www.ibm.com/topics/convolutional-neural-networks